vSphere ESXi Agent (EAM) Upgrade Failed


We recently updated our vCenter certificate, and according to the UI everything appeared to have worked. Our monitoring platform never picked up an error that was being logged in /var/log/vmware/eam/eam.log, and it only came to light when we tried upgrading our SRM environment to v9. The SRM upgrades went to plan, but the cluster remediation checks failed with the error shown below, and the cluster image would never return to compliance.

Health Check Failed to retrieve data about service ‘Cluster_01’ on vSphere ESXi Agent Manager. Verify that the Service ‘Cluster_01’ is running and try again.

Problem

The error above indicates that the vCLS (cluster service) VMs are not healthy. For some reason those cluster VMs have got into a degraded state, even though the UI shows the cluster as healthy, and so the image cannot verify compliance. In our specific case, the SRM HBR Agent had to be pushed via the vRLCM, and it would push the agent out to one host in the cluster and fail on the remaining hosts. As you go through esxupdate.log on the ESXi host and the vCenter logs, you can see the errors on the hosts where the vRLCM push fails. Removing the VIB manually from the host and rerunning the compliance check still resulted in the cluster compliance check showing the "vSphere ESXi Agent (EAM) upgrade failed" error.

Several workarounds are suggested, such as resetting the vRLCM database, but they did not solve the above issue.

Browse to /var/log/vmware/eam/eam.log, where you can find three error messages:

- EAM is still loading from database

- Failed to log in to vCenter as extension. vCenter has probably not loaded the EAM extension.xml yet. Cannot log in due to incorrect username or password

- Failed to authenticate extension.com.vmware.vim.eam to vCenter.

These errors can occur when the vpxd-extension certificate stored in VECS does not match the certificate information stored in the vCenter Server database for the EAM extension.
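To check quickly whether a copy of eam.log contains the three messages listed above, the log can be scanned for their text. The following is a minimal Python sketch rather than a VMware tool: the function name `scan_eam_log` and the short labels are hypothetical, and the match strings are simply taken from the messages quoted in this post.

```python
from collections import Counter
from typing import Iterable

# Substrings taken from the three eam.log messages quoted in this post.
# The labels on the left are illustrative names, not VMware identifiers.
SIGNATURES = {
    "still_loading": "EAM is still loading from database",
    "login_failed": "Failed to log in to vCenter as extension",
    "auth_failed": "Failed to authenticate extension",
}


def scan_eam_log(lines: Iterable[str]) -> Counter:
    """Count how many lines contain each known error signature."""
    counts: Counter = Counter()
    for line in lines:
        for label, needle in SIGNATURES.items():
            if needle in line:
                counts[label] += 1
    return counts
```

To use it, open a copy of the log with `open(path, errors="replace")` and pass the file object to `scan_eam_log`. A non-zero count for any label suggests the log is worth reading in detail.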

Fix for the Issue

Step 1 – Establish an SSH session to the vCSA and run the command to retrieve the vpxd-extension solution user certificate and key

Step 2 – Verify whether there are issues with the Primary Network Identifier (PNID). If not, move to Step 6.

An issue can arise around the Primary Network Identifier (PNID) on vCenter. In most cases, either vCenter was deployed with a short hostname rather than the FQDN, or the case of the VMware vCenter Server Appliance (VCSA) host name does not match the Common Name (CN) in the certificates, which are compared case-sensitively. For example, if vCenter is deployed with a lowercase name, the certificate signing request (CSR) should also use the lowercase fully qualified domain name. The principle is that the case needs to match: a lowercase host name needs a lowercase CN, and an uppercase host name needs an uppercase CN.
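The case-matching rule can be expressed as a small comparison. This is an illustrative Python sketch only: the function name `compare_pnid_to_cn` and its return labels are made up for this example, and the host names in the usage note are placeholders.

```python
def compare_pnid_to_cn(pnid: str, cn: str) -> str:
    """Classify how a PNID relates to a certificate Common Name.

    Returns "match" when the strings are identical, "case_mismatch" when
    they differ only in letter case, and "different" otherwise.
    """
    # Command output often carries a trailing newline, so trim whitespace.
    pnid, cn = pnid.strip(), cn.strip()
    if pnid == cn:
        return "match"
    if pnid.casefold() == cn.casefold():
        return "case_mismatch"
    return "different"
```

For example, `compare_pnid_to_cn("VCSA01.example.com", "vcsa01.example.com")` reports a case-only mismatch, which is the situation where changing the case of the PNID applies.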

Step 3 – Check the current value of the Primary Network Identifier (PNID) with the following command.

/usr/lib/vmware-vmafd/bin/vmafd-cli get-pnid --server-name localhost

Step 4 – Set the PNID value to the same name, changing the case as required.

/usr/lib/vmware-vmafd/bin/vmafd-cli set-pnid --server-name localhost --pnid <pnid>

Step 5 – Verify that the change has been applied. If not, go to Home > Inventory > vCenter > Configure > Settings > General > Edit > Runtime settings, change the vCenter Server Name, click Save, and reboot the appliance.

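Step 6 – Create a directory to hold the exported certificate and key

mkdir /certificate

Step 7 – Run the below commands to export the vpxd-extension certificate and key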

/usr/lib/vmware-vmafd/bin/vecs-cli entry getcert --store vpxd-extension --alias vpxd-extension --output /certificate/vpxd-extension.crt

/usr/lib/vmware-vmafd/bin/vecs-cli entry getkey --store vpxd-extension --alias vpxd-extension --output /certificate/vpxd-extension.key

Step 8 – To update the extension’s certificate with vCenter Server, run the below command

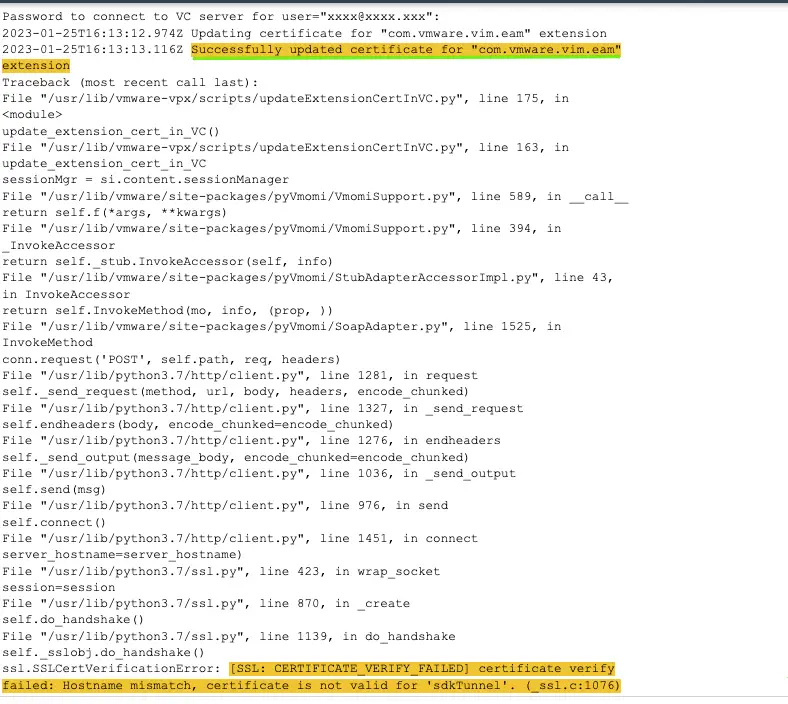

python /usr/lib/vmware-vpx/scripts/updateExtensionCertInVC.py -e com.vmware.vim.eam -c /certificate/vpxd-extension.crt -k /certificate/vpxd-extension.key -s <PNID/FQDN of vCenter Server> -u Administrator@vsphere.local

Step 9 – Finally, restart the VMware ESX Agent Manager service

service-control --stop vmware-eam && service-control --start vmware-eam

Step 10 – Once this is done, the output of eam.log shows a successful update of the EAM extension. The below error can be safely ignored

Step 11 – Under your cluster > vSphere Cluster Services > Health, check that the cluster VM status changes from degraded to healthy.

Step 12 – Your compliance checks in Lifecycle Manager will work from this point.
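The commands from Steps 6 to 9 can be collected into a small dry-run helper that only builds and prints them, so they can be reviewed before anything is executed on the appliance. This is a hedged Python sketch and not the script from any VMware KB: the function names `build_eam_fix_commands` and `render_commands` are hypothetical, the paths and flags are copied from the steps above, and the vCenter name passed in is a placeholder.

```python
import shlex
from typing import List

VECS_CLI = "/usr/lib/vmware-vmafd/bin/vecs-cli"
UPDATE_SCRIPT = "/usr/lib/vmware-vpx/scripts/updateExtensionCertInVC.py"


def build_eam_fix_commands(
    vcenter_name: str,
    cert_dir: str = "/certificate",
    user: str = "Administrator@vsphere.local",
) -> List[List[str]]:
    """Return the Step 6 to Step 9 commands as argument lists (nothing is run)."""
    crt = f"{cert_dir}/vpxd-extension.crt"
    key = f"{cert_dir}/vpxd-extension.key"
    return [
        # Step 6: directory for the exported files
        ["mkdir", cert_dir],
        # Step 7: export the vpxd-extension certificate and key
        [VECS_CLI, "entry", "getcert", "--store", "vpxd-extension",
         "--alias", "vpxd-extension", "--output", crt],
        [VECS_CLI, "entry", "getkey", "--store", "vpxd-extension",
         "--alias", "vpxd-extension", "--output", key],
        # Step 8: register the certificate for the EAM extension
        ["python", UPDATE_SCRIPT, "-e", "com.vmware.vim.eam",
         "-c", crt, "-k", key, "-s", vcenter_name, "-u", user],
        # Step 9: restart the EAM service
        ["service-control", "--stop", "vmware-eam"],
        ["service-control", "--start", "vmware-eam"],
    ]


def render_commands(commands: List[List[str]]) -> str:
    """Render the argument lists as shell-quoted lines for review."""
    return "\n".join(shlex.join(command) for command in commands)
```

Calling `print(render_commands(build_eam_fix_commands("vcsa01.example.com")))` prints one command per line. Keeping the helper as a dry run is deliberate: the update script prompts for credentials, and each step is worth checking by hand on a production vCenter.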

A Python script shown in KB 90561 does the same job.

References

https://kb.vmware.com/s/article/80588

https://kb.vmware.com/s/article/2123631?lang=en_US&queryTerm=2123631

https://kb.vmware.com/s/article/79892?lang=en_US&queryTerm=79892

https://kb.vmware.com/s/article/94934?lang=en_US&queryTerm=94934

https://kb.vmware.com/s/article/2112577?lang=en_US&queryTerm=2112577

https://kb.vmware.com/s/article/90561?lang=en_US&queryTerm=90561