IBM Spectrum Scale is part of a suite of Software defined storage products that provides a unified interface for storage management.

The Spectrum Scale (GPFS/TigerShark) is a high-performance object storage scale-out clustered file system for managing unstructured files developed by IBM that provides concurrent access to a file . It can be deployed in shared disk configurations allowing us to configure high available file system allowing concurrent access from a cluster of nodes thus offering parallelism and guaranteed availability at all times.

Overcoming Single-Point-of-Failure in Traditional NFS

In a typical NFS server model, each client connects to a single NFS server to access data. While simple, this setup has a major drawback: the server is a single point of failure. If the server goes down, clients lose access to their data, and performance can become a bottleneck.

How IBM Spectrum Scale Solves This

IBM Spectrum Scale eliminates this issue by spreading data across multiple nodes, called NSD nodes. Key benefits of this architecture include:

- No single point of failure: If one node goes down, other nodes continue serving data.

- Maximum throughput: Data can be read and written in parallel across multiple nodes.

- Scalable capacity: Adding more NSD nodes increases storage capacity and performance simultaneously.

By distributing both data and access across multiple NSD nodes, Spectrum Scale provides high availability, high performance, and true scalability, addressing the limitations of traditional NFS systems.

IBM Software based Storage Solutions

The IBM’s key software based storage solutions in the market today are;

Quick Reference Table

| Product | Purpose / Focus | Environment | Key Features |

|---|---|---|---|

| Storage Scale | High-performance file management | Hybrid / Cloud / On-prem | Clustered file system, container-native, intelligent tiering |

| Storage Virtualize | Software-defined storage | On-prem / Hybrid | 500+ storage systems, integrates with FlashSystem, mirroring |

| Virtualize for Public Cloud | Cloud storage virtualization | AWS / Azure / IBM Cloud | Runs on EC2 & bare metal, virtualizes cloud block storage |

| Storage Insight | Monitoring Platform | On-prem / Hybrid | Performance tracking, storage reporting, cost optimization |

| Storage Archive | Tape-based data access | On-prem | LTFS-based, simple access, Single Drive/Library/Enterprise editions |

| Storage Protect | Backup & data resilience | Hybrid | Scalable, integrates with tape/cloud/object storage, cyber-resilient |

| Protect Plus | Modern data protection | Hybrid / Cloud | SLA-based automation, IBM Cloud integration, RBAC & REST APIs |

| Storage Control | Monitoring & analytics | Multi-vendor | Performance tracking, storage reporting, cost optimization |

| Storage Discover | AI-ready data catalog | OpenShift / Hybrid | Real-time indexing, exabyte-scale, OpenShift integration |

| Cloud Object Storage (COS) | Scalable unstructured storage | Cloud & On-prem | 15 nines reliability, TBs to exabytes, container-optimized |

Storage Scale: Smarter File Management

IBM Storage Scale is built for big, messy, unstructured data. Whether you’re dealing with massive analytics datasets or running AI workloads, this clustered file system keeps everything organized. It also works seamlessly with Kubernetes and hybrid cloud apps, so developers and IT teams can access the data they need without fuss.

Storage Virtualize & Virtualize for Public Cloud: One Storage to Rule Them All

Ever wish you could manage dozens of different storage systems as if they were one? That’s exactly what IBM Storage Virtualize does. It supports hundreds of storage arrays and integrates with IBM FlashSystem hardware.

The public cloud version extends this magic to AWS, Azure, and IBM Cloud — letting you replicate and mirror data between on-prem and cloud effortlessly. Your data, wherever it lives, stays connected and manageable.

Storage Archive: Tape Made Simple

Yes, tape storage still exists, and yes, it’s still useful for long-term backups. IBM Storage Archive takes the headache out of tape management. Using the Linear Tape File System (LTFS), you can read, write, and organize data without juggling extra software. Choose from Single Drive, Library, or Enterprise editions depending on your needs.

Storage Protect & Protect Plus: Peace of Mind for Your Data

Backups and data resilience are no longer optional. IBM Storage Protect can handle billions of objects, moving data to tape, cloud, or object storage. Meanwhile, Protect Plus is more modern — think VM, database, SaaS, and container protection, all automated and policy-driven. It even integrates with IBM Cloud Object Storage and has security baked in.

Storage Control: Keep Everything in Check

Managing storage across multiple vendors can be a nightmare. IBM Storage Control gives you a clear view — from departments to individual apps — and tracks performance, capacity, and costs. It’s like having a dashboard for your entire storage ecosystem.

Storage Discover: Organize Data for AI

Data is only useful if you can find it. IBM Storage Discover catalogs and indexes your files and objects in real time, even at exabyte scale. It works beautifully in Red Hat OpenShift environments, making containerized AI workloads easier to manage.

Cloud Object Storage (COS): Scale Without Worry

Finally, IBM Cloud Object Storage is the go-to solution for large-scale, unstructured data. It’s highly reliable, scalable from terabytes to exabytes, and optimized for cloud and container workloads. In short: your data is always available, safe, and ready to use.

Features of Scale System

- Storage Tiering

- Container Native Storage

- Data replication

- Policy Based Storage Management

- Multi-site operations

All GPFS management utilities are located under the /usr/lpp/mmfs/bin/ directory and commands typically start with mm(multimedia)

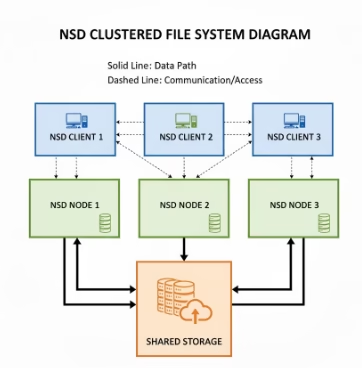

The NSD Model of Spectrum Scale

The architecture of IBM Spectrum Scale is built around Network Shared Disks (NSDs) and parallelism, which together enable high performance, scalability, and efficient access to unstructured data.

Network Shared Disks (NSDs)

- NSD Nodes: A node is called an NSD node when it provides access to storage for other nodes in the cluster. These nodes act as the storage access points.

- NSD Clients: Other nodes, called NSD clients, request data from the NSD nodes. The NSD node serves the requested data, enabling efficient data sharing across the cluster.

Parallelism

This breaks data and put its on all storage systems thus offering parallel data access across multiple NSD nodes and disks. This ensures:

- High throughput for large-scale workloads

- Low latency access even under heavy demand

- Scalable performance as more nodes or disks are added

The advantage of parallelism is that unlike the traditional way data is fetched sequentially, here data can be be obtained in parallel way offering a very high bandwidth reducing response times.

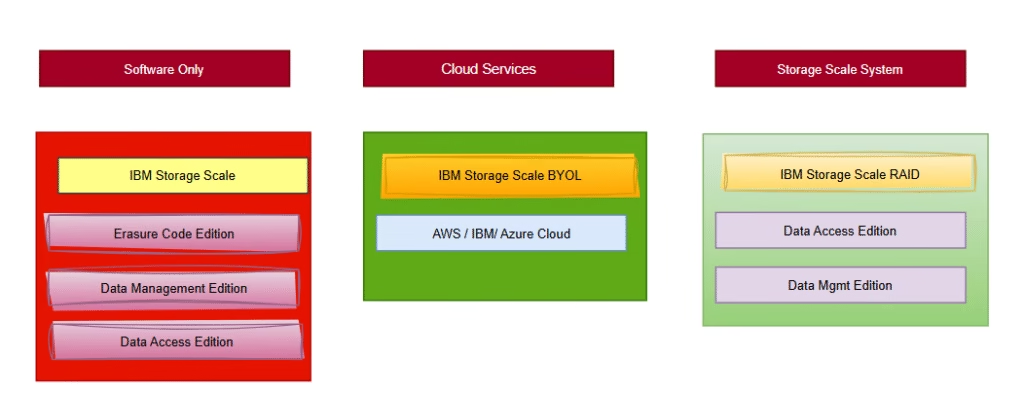

Software Deployment Options

The Spectrum Scale can be deployed in three ways

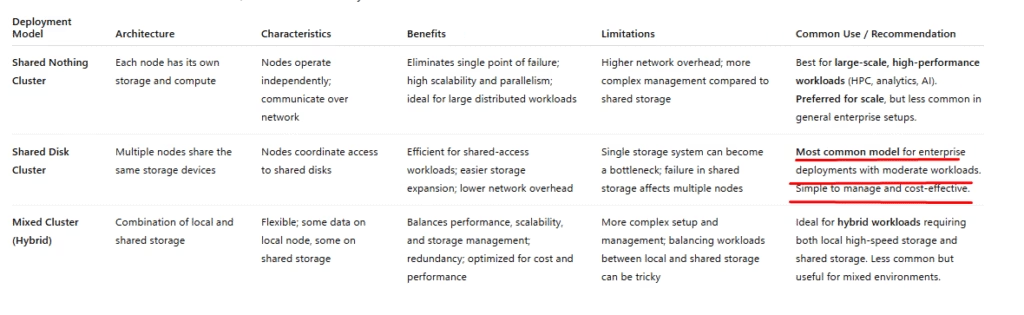

Deployment Options of NSD

The NSD can be deployed in 3 ways and the most commonly used one is the shared disk cluster model.

Diagram showing NSD clients, NSD nodes, and shared storage

There exist another deployment model IBM Elastic Storage Server which is covered in our next blog.