iSCSI device shows as Not Consumed

We recently ran into an odd issue in our VMware estate (vSphere 6.7 U3), which runs on iSCSI-backed datastores. On a few hosts in a cluster, the datastores showed as “Not Consumed”. When we rebooted one of these hosts, it got stuck at the “vFlash loaded successfully” message and took a long time to come back online.

Problem

A number of new servers were provisioned with LUNs presented from an iSCSI SAN (Dell PowerVault ME), using matching HLU/LLU. PowerVault is entry-level storage, yet it packs features typically seen in midrange arrays. The array relies on VAAI, and in our case we use it as extra storage purely for EMC NetWorker backups. According to the backup tool, one of the VMware snapshot removals failed, which may have triggered the much wider problem of the datastore moving into a Not Consumed state.

Inspection

The iSCSI device shows up under Storage Devices in the vSphere Client, but its Datastore column displays “Not Consumed”.

vFlash loaded successfully boot error

A reboot of the server results in this condition

Problem

Once the datastore is in the Not Consumed state, the boot process waits for the VMFS file system on the device to be detected, so the host does not come back online. In our case it typically took around 90 minutes for the host to come back, and when it did, hostd had crashed and the ESXi Host Client (ESXUI) would not work.

Inspection of vmkernel.log

In the log you will see the host repeatedly trying to attach to the datastore and failing, with vmkernel logging “path in doubt” messages.

zcat vmkernel.*.gz | grep doubt

Or, to list all affected datastores with a count of log entries for each:

zcat vmkernel.*.gz | grep doubt | awk '{print $7}' | sort | uniq -c
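The same triage can be scripted if you want per-device counts across all rotated logs in one go. This is a minimal sketch, not a supported tool: it assumes the rotated logs live under /var/run/log (the default glob is an assumption you may need to change) and that affected devices appear in the matching lines as NAA identifiers ("naa." followed by hex digits), rather than relying on the seventh whitespace-separated field like the awk one-liner. It avoids f-strings so it should also run on older Python 3 interpreters.

```python
import glob
import gzip
import re
from collections import Counter

# Assumption: affected devices appear in the log lines as NAA identifiers,
# e.g. "naa." followed by hex digits; adjust the pattern if your lines differ.
NAA_ID = re.compile(r"naa\.[0-9a-fA-F]+")


def count_in_doubt_devices(lines):
    """Count matching log lines per NAA device ID (one hit per device per line)."""
    counts = Counter()
    for line in lines:
        if "doubt" not in line:  # same filter as `grep doubt`
            continue
        for device in set(NAA_ID.findall(line)):
            counts[device] += 1
    return counts


def scan_rotated_logs(pattern="/var/run/log/vmkernel.*.gz"):
    """Read every rotated vmkernel log, like `zcat vmkernel.*.gz` (path is an assumption)."""
    counts = Counter()
    for path in sorted(glob.glob(pattern)):
        with gzip.open(path, "rt", errors="replace") as fh:
            counts.update(count_in_doubt_devices(fh))
    return counts


if __name__ == "__main__":
    for device, hits in scan_rotated_logs().most_common():
        print("%6d %s" % (hits, device))
```

Run it on the host, or copy the vmkernel.*.gz files to a workstation and pass a different glob to scan_rotated_logs().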

We escalated to VMware support, but they referred us to the storage vendor, citing log entries showing that frames between the hosts and the array were being dropped.

Temporary Workaround to get the host back online

What happens when you detach the LUN from the storage?

The vmkernel.log output shows which datastore has the problem and therefore which one needs fixing. When you detach the LUN on the SAN, it is unmapped from the host, which lets the host finish booting, but you won’t be able to mount the datastore back on the host. Treat this as a quick fix to get a host back online if it is stuck at the “vFlash loaded successfully” message.

Follow this guide to detach the LUN from the SAN.

Solution

To check whether any VM processes are holding locks on the datastore, run:

localcli vm process list
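If the host runs many VMs, a short filter over the list output helps pick out the World IDs that matter. This is a sketch, assuming the usual layout of `localcli vm process list` output (a VM name line followed by indented `World ID:` and `Config File:` fields); the script name in the comment is hypothetical. The Config File path may show the datastore by name or by UUID, so pass whichever form appears in your output.

```python
import sys


def world_ids_on_datastore(process_list_output, datastore):
    """Return World IDs of running VMs whose .vmx file lives on `datastore`.

    Assumes each VM block lists "World ID:" before "Config File:".
    """
    results = []
    world_id = None
    for raw in process_list_output.splitlines():
        line = raw.strip()
        if line.startswith("World ID:"):
            world_id = line.split(":", 1)[1].strip()
        elif line.startswith("Config File:") and world_id is not None:
            config = line.split(":", 1)[1].strip()
            if config.startswith("/vmfs/volumes/%s/" % datastore):
                results.append(world_id)
            world_id = None  # this VM block is done
    return results


if __name__ == "__main__":
    # Hypothetical usage: localcli vm process list | python find_worlds.py <datastore>
    for wid in world_ids_on_datastore(sys.stdin.read(), sys.argv[1]):
        print(wid)
```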

If a VM process is holding a lock, kill it using its World ID from the list output (9505664 below is only an example):

esxcli vm process kill -t soft -w 9505664

If there are no process locks from a VM, proceed to the next step.

MTU Mismatch

- Verify that every host iSCSI VMkernel port, vSwitch, physical switch port and storage iSCSI port is set to an MTU of 9000 (jumbo frames). The MTU must be consistent across the entire path. In our case the MTU was already consistent across the hosts, so that was not the fix, but an MTU mismatch is still a common cause of a datastore turning Not Consumed.

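To test for an MTU mismatch, ping a storage array port from the iSCSI VMkernel interface with the don't-fragment bit set (-d) and a payload sized for a 9000-byte MTU:

vmkping -I <VMkernel interface of the iSCSI adapter> -d -s 8972 <StorageArrayPortIP>

e.g.: vmkping -I vmk3 -d -s 8972 192.168.20.155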
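For a don't-fragment jumbo-frame test, the largest payload that fits in one packet is the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header. A small sketch of that arithmetic, assuming IPv4 without header options (max_ping_payload is a hypothetical helper name):

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
ICMP_HEADER = 8   # bytes, ICMP echo header


def max_ping_payload(mtu):
    """Largest ICMP payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print("MTU %d -> vmkping -d -s %d" % (mtu, max_ping_payload(mtu)))
```

If the 9000-byte test fails with -d while a 1500-byte-sized ping succeeds, some hop on the path is not configured for jumbo frames.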

If there is no MTU issue, proceed to the next step.

Upgrade Server Firmware

- With the above done, the next step is to upgrade the server firmware. We run Dell PowerEdge servers, so we upgraded them to the latest firmware. One step that is often missed in this process: work with the server vendor to confirm that the firmware they recommend is supported by VMware for your ESXi version.

Use Certified I/O Drivers and Matching OEM Firmware

- Check the VMware I/O Compatibility Guide for the driver and firmware versions certified for your adapters, then upgrade or downgrade the driver to match. This guide should help with that: Upgrade/Downgrade a VIB/Driver in ESXi 7 using ESXCLI

VMFS Locking Mechanisms

In a shared storage environment, when multiple hosts access the same VMFS datastore, specific locking mechanisms are used. These locking mechanisms prevent multiple hosts from concurrently writing to the metadata and ensure that no data corruption occurs. This type of locking is called distributed locking.

To cope with ESXi hosts crashing, distributed locks are lease-based. An ESXi host that holds a lock on a datastore must renew the lock's lease periodically; this is how it tells the storage that it is still alive. If a host has not renewed its lease for some time, it is probably dead.

If the current lock owner does not renew the lease within a certain period, another host can break the lock. When an ESXi host wants to access a file locked by another host, it checks the lock's timestamp; if the timestamp has not been updated for long enough, the host can remove the stale lock and place its own.
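To make the lease idea concrete, here is a toy model in Python. It is not VMFS's on-disk format: the LeaseLock class, its lease_timeout parameter and the injectable clock are illustrative assumptions. The point it shows is that only the owner can move the timestamp forward, and any host may take the lock once that timestamp is older than the lease.

```python
import time


class LeaseLock:
    """Toy lease-based lock (a simplified model, not the real VMFS format)."""

    def __init__(self, lease_timeout, clock=time.monotonic):
        self.lease_timeout = lease_timeout  # hypothetical: seconds without renewal before stale
        self.clock = clock
        self.owner = None
        self.timestamp = None  # last time the owner renewed the lease

    def is_stale(self):
        return (self.owner is not None
                and self.clock() - self.timestamp > self.lease_timeout)

    def acquire(self, host):
        # Free, already ours, or stale: take it and stamp it with the current time.
        if self.owner in (None, host) or self.is_stale():
            self.owner = host
            self.timestamp = self.clock()
            return True
        return False  # a live host still holds the lease

    def renew(self, host):
        # Only the current owner can push its timestamp forward ("still alive").
        if self.owner != host:
            return False
        self.timestamp = self.clock()
        return True
```

Injecting the clock keeps the model deterministic, so the expiry logic can be exercised without sleeping.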

What Happens When a Host Crashes?

When an ESXi host is installed and configured, it is assigned a unique ID known as the host UUID. When an ESXi host first mounts a VMFS volume, it is assigned a slot within the VMFS heartbeat region, where the host writes its own heartbeat record. This record is associated with the host UUID.

When an ESXi host in an HA cluster fails, it might leave behind stale locks on the VMFS datastore. These stale locks can prevent HA from restarting the failed VMs on the surviving hosts in the cluster. For the restart to succeed, the host attempting to power on the VM does the following:

1. It checks the heartbeat region of the datastore for the lock owner’s ID.

2. A few seconds later, it checks to see if this host’s heartbeat record was updated. Because the lock owner crashed, it is not able to update its heartbeat record.

3. The recovery host ages the locks left by this host. After this is done, other hosts in the cluster do not attempt to break the same stale locks.

4. The recovery host replays the heartbeat’s VMFS journal to clear and then acquire the locks.

5. When the crashed host is rebooted, it clears its own heartbeat record and acquires a new one (with a new generation number). As a result, it does not attempt to lock its original files because it is no longer the lock owner.
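The five recovery steps can be modelled the same way. This is a simplified sketch under stated assumptions: the Heartbeat class, its counter and reclaimed fields, and representing a lock owner as a (host UUID, generation) pair are hypothetical simplifications, and journal replay is reduced to a comment.

```python
class Heartbeat:
    """Toy heartbeat record for one host slot (hypothetical fields)."""

    def __init__(self, host_uuid, generation):
        self.host_uuid = host_uuid
        self.generation = generation
        self.counter = 0        # bumped by the live host on every heartbeat write
        self.reclaimed = False  # set once a recovery host has aged this record

    def beat(self):
        self.counter += 1


def owner_is_dead(hb, wait):
    """Steps 1-2: read the owner's heartbeat, wait, and see whether it moved."""
    before = hb.counter
    wait()  # a few seconds in real life; injected here so it can be tested
    return hb.counter == before


def recover_locks(locks, hb, new_owner, wait):
    """Steps 3-4 (simplified): age the dead host's heartbeat, then take its locks.

    locks maps a file name to its owner as a (host_uuid, generation) pair.
    """
    if hb.reclaimed:
        return []  # another host already aged these locks, so leave them alone
    if not owner_is_dead(hb, wait):
        return []
    hb.reclaimed = True
    taken = []
    for name, owner in locks.items():
        if owner == (hb.host_uuid, hb.generation):
            # Real VMFS replays the dead host's journal here before reusing the lock.
            locks[name] = new_owner
            taken.append(name)
    return taken
```

Step 5 falls out of the owner format: a rebooted host comes back as Heartbeat(same_uuid, old_generation + 1), so no lock recorded under the old generation matches it.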

VMFS supports two types of locking mechanism:

- SCSI reservations (aka SCSI-2 Reservations or SCSI-Reserve and Release)

- Primitive ATS (or Hardware assisted locking)

Primitive ATS (or Hardware assisted locking)

The ATS-only mechanism is used on all newly formatted VMFS5 datastores if the underlying storage supports ATS and discrete locking per disk. SCSI reservations are never used on these datastores. When a VMFS5 volume is created on a LUN on an array that supports the ATS primitive, the ATS-only attribute is written to the volume once an ATS operation succeeds. From then on, every host sharing the volume always uses ATS.

ATS+SCSI Mechanism (Atomic Test & Set)

A VMFS datastore that supports the ATS+SCSI mechanism is configured to use ATS and attempts to use it when possible. If ATS fails, the VMFS datastore reverts to SCSI reservations.

SCSI reservations are used on storage devices that do not support hardware acceleration. The SCSI reservations lock an entire storage device while an operation that requires metadata protection is performed. After the operation completes, VMFS releases the reservation and other operations can continue.

Because this lock is exclusive, excessive SCSI reservations by a host can cause performance degradation on other hosts that are accessing the same VMFS.
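A toy comparison shows why an exclusive reservation hurts when several hosts update metadata on the same LUN at once. The granted() helper is hypothetical: it takes simultaneous (host, resource) requests against one device and returns the ones that can proceed in a single round under each scheme, with ATS modelled as one lock record per resource.

```python
def granted(requests, mode):
    """Return the simultaneous metadata updates that can proceed in one round.

    requests: (host, resource) pairs that all target the same LUN, in arrival order.
    mode "scsi2": the reservation locks the whole device, so only the first proceeds.
    mode "ats": each resource has its own lock record, so the first request for
    every distinct resource proceeds and only same-resource requests wait.
    """
    if mode == "scsi2":
        return requests[:1]
    if mode != "ats":
        raise ValueError("mode must be 'scsi2' or 'ats'")
    seen = set()
    winners = []
    for host, resource in requests:
        if resource not in seen:
            seen.add(resource)
            winners.append((host, resource))
    return winners


if __name__ == "__main__":
    burst = [("esx01", "vm1.vmdk"), ("esx02", "vm2.vmdk"),
             ("esx03", "vm3.vmdk"), ("esx04", "vm1.vmdk")]
    for mode in ("scsi2", "ats"):
        print(mode, len(granted(burst, mode)), "of", len(burst))
```

With four hosts updating three different files, the reservation lets one update through per round while per-record locking lets three through; the more hosts share a LUN, the wider that gap gets.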

How does ATS locking work?

1. The ESXi host needs to acquire an on-disk lock for a specific VMFS resource or resources.

2. It reads the block address on which it needs to write the lock record on the array.

3. If the lock is free, it atomically writes the lock record.

4. If the host receives an error (because another host may have beaten it to the lock), it retries the operation.

5. If the array returns an error, the host falls back to using a standard VMFS locking mechanism, using SCSI-2 reservations.
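The ATS flow above, including the fallback in step 5, can be sketched as follows. Everything here is a hypothetical model: ArrayLun stands in for the array, compare_and_write() for the ATS primitive (a threading.Lock provides the atomicity the array would), and AtsUnsupported for the array returning an error. The ats_only flag models the ATS-only attribute: SCSI reservations are never used on such volumes, so the sketch raises instead of falling back. Stale-lock handling is not modelled.

```python
import threading


class AtsUnsupported(Exception):
    """Stands in for the array rejecting the ATS command."""


class ArrayLun:
    """Toy LUN holding VMFS lock records keyed by block address."""

    def __init__(self, supports_ats=True):
        self.supports_ats = supports_ats
        self.records = {}                    # block address -> owning host (absent = free)
        self._atomic = threading.Lock()      # the atomicity the array provides for ATS
        self.reservation = threading.Lock()  # a SCSI-2 reservation covers the whole LUN

    def compare_and_write(self, addr, expected, new):
        """ATS: write `new` only if the record still equals `expected`."""
        if not self.supports_ats:
            raise AtsUnsupported(addr)
        with self._atomic:
            if self.records.get(addr) != expected:
                return False                 # miscompare: another host got there first
            self.records[addr] = new
            return True


def acquire_with_reservation(lun, addr, host):
    """Fallback: reserve the entire device, update the record, release."""
    with lun.reservation:                    # only one host at a time holds the reservation
        if lun.records.get(addr) not in (None, host):
            return False
        lun.records[addr] = host
        return True


def acquire_lock(lun, addr, host, ats_only=False, retries=3):
    """Steps 1-5: try to take the lock record at `addr` for `host`."""
    for _ in range(retries):
        current = lun.records.get(addr)      # step 2: read the lock record
        if current not in (None, host):
            return False                     # held by another host
        try:
            if lun.compare_and_write(addr, current, host):
                return True                  # step 3: atomic write succeeded
        except AtsUnsupported:
            if ats_only:
                raise                        # ATS-only volumes never use reservations
            return acquire_with_reservation(lun, addr, host)  # step 5
        # step 4: miscompare, so loop to re-read and retry
    return False
```

The retry loop re-reads the record before every attempt, so a host that loses the race sees the new owner and backs off instead of overwriting it.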

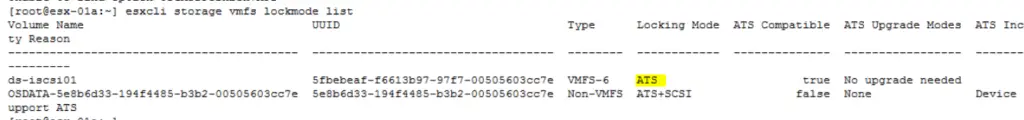

To display which locking mechanism each VMFS datastore uses, run:

esxcli storage vmfs lockmode list

To check whether ATS is also used for VMFS heartbeating, run:

esxcli system settings advanced list -o /VMFS3/UseATSForHBonVMFS

Upgrade SAN

With the above done, it’s time to upgrade the SAN array. In our case, the SAN upgrade released the iSCSI locks held at the LUN level.

Reboot Hosts

After the SAN upgrade, an HBA rescan won’t be enough, so all affected ESXi hosts need a reboot to pick up the Not Consumed iSCSI datastore. The reboot is necessary to release the ATS locks from the SAN.