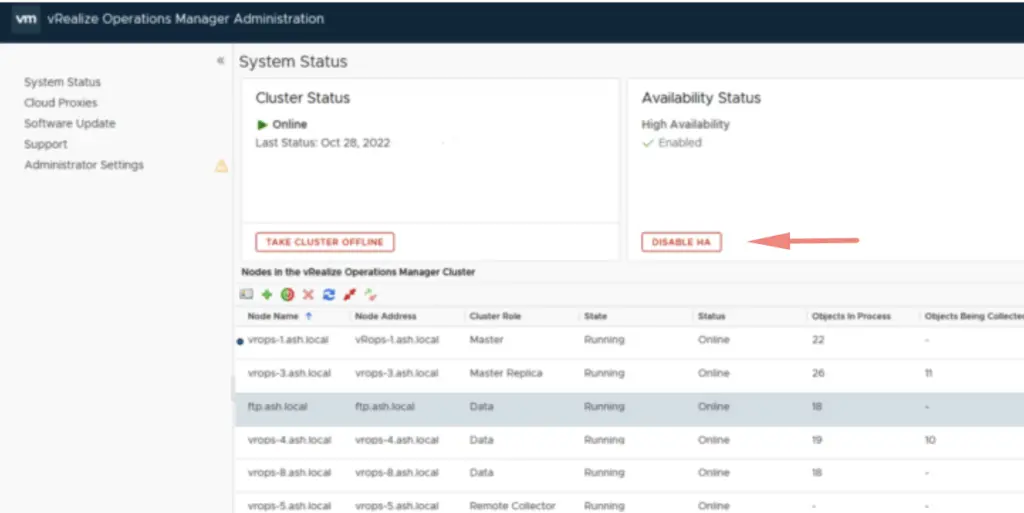

Disabling HA is a prerequisite, which we covered in the previous blog post. Now that our environment has grown considerably, we will enable Continuous Availability (CA) on vROps. HA protects us against the loss of a single node, whereas CA ensures that if an entire fault domain fails, the vROps cluster stays operational, albeit in a degraded mode. HA and CA cannot be enabled at the same time. The vROps cluster goes offline during the CA configuration, so plan accordingly.

In case of a Fault Domain failure, the remaining active Fault Domain and the Witness Node will keep the cluster alive by promoting the Replica Node as the new Master Node. The cluster will run in degraded mode.
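To make the failover behaviour concrete, here is a minimal Python sketch of a simplified majority-vote (2 of 3) model, assuming fault domain 1, fault domain 2 and the witness each count as one vote and the master starts in fault domain 1. The function `evaluate` and its status labels are hypothetical and do not reflect vROps's internal logic; in particular, reporting "degraded" whenever any single member is missing is an assumption.

```python
from typing import Optional, Tuple


def evaluate(fd1_up: bool, fd2_up: bool, witness_up: bool) -> Tuple[str, Optional[str]]:
    """Simplified quorum model (assumption, not vROps internals).

    Returns (cluster status, fault domain hosting the master).
    """
    # Each surviving member contributes one vote; 2 of 3 is a majority.
    votes = int(fd1_up) + int(fd2_up) + int(witness_up)
    if votes < 2:
        # No majority: the cluster cannot safely elect a master.
        return ("offline", None)
    status = "healthy" if votes == 3 else "degraded"
    # If fault domain 1 is gone, fault domain 2 + witness hold the majority,
    # so the replica in fault domain 2 is promoted to master.
    master = "fd1" if fd1_up else "fd2"
    return (status, master)


print(evaluate(True, True, True))    # ('healthy', 'fd1')
print(evaluate(False, True, True))   # ('degraded', 'fd2')
print(evaluate(True, False, False))  # ('offline', None)
```

The witness holds no data in this model; its only job is to break the tie so that exactly one side can keep running.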

In this environment, all my nodes are managed by a single vCenter; however, the data nodes must be split across different vSphere clusters so that they sit in two separate fault domains.

The vROps CA Configuration

To implement vRealize Operations continuous availability, we need the following prerequisites in place:

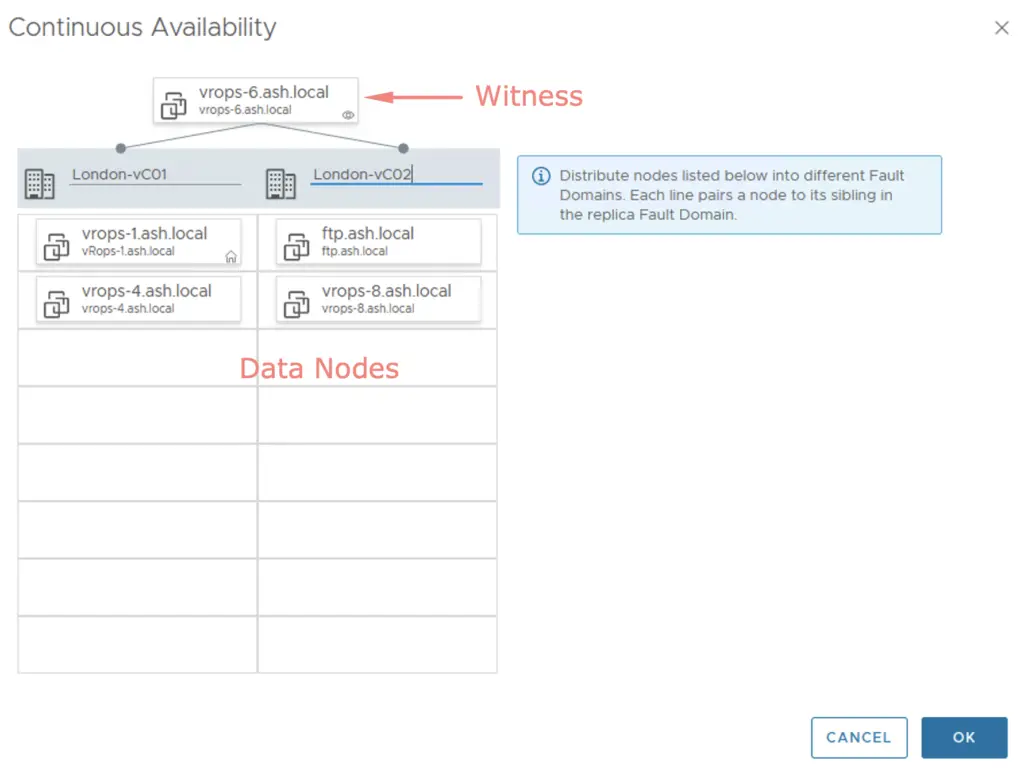

- Master Node – Assign the master node to fault domain 1.

- Replica Master Node – The replica node is a copy of the master node and must be placed in fault domain 2.

- Witness Node – The witness node should ideally be deployed in a third site and must be up and running. It acts as a quorum node to prevent a split-brain scenario.

- Data Node – To distribute data evenly across the fault domains, each fault domain must contain an equal number of data nodes.

- Remote Collectors – These do not need to be assigned to a fault domain.
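The placement rules above can be expressed as a small pre-flight check. This is a hypothetical Python sketch, not a vROps API: the topology dictionary, role names such as `"replica"` and `"remote_collector"`, and the function `check_ca_placement` are illustrative assumptions, and it assumes the witness is left outside both fault domains, as in my lab.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical topology format: node name -> (role, fault domain or None).
Topology = Dict[str, Tuple[str, Optional[int]]]


def check_ca_placement(nodes: Topology) -> List[str]:
    """Return rule violations; an empty list means the layout passes."""
    problems: List[str] = []
    data_per_fd = {1: 0, 2: 0}
    for name, (role, fd) in nodes.items():
        if role == "master" and fd != 1:
            problems.append(f"{name}: master must be in fault domain 1")
        elif role == "replica" and fd != 2:
            problems.append(f"{name}: replica must be in fault domain 2")
        elif role == "witness" and fd is not None:
            # Assumption: the witness is not assigned to either fault domain.
            problems.append(f"{name}: witness should sit outside both fault domains")
        elif role == "data":
            if fd in data_per_fd:
                data_per_fd[fd] += 1
            else:
                problems.append(f"{name}: data node must be in fault domain 1 or 2")
        # Remote collectors ("remote_collector") are skipped: no fault domain needed.

    roles = [role for role, _ in nodes.values()]
    for required in ("master", "replica", "witness"):
        if roles.count(required) != 1:
            problems.append(f"exactly one {required} node is required")
    if data_per_fd[1] != data_per_fd[2]:
        problems.append(f"unequal data nodes: FD1={data_per_fd[1]}, FD2={data_per_fd[2]}")
    return problems


# Hypothetical example layout (node names are placeholders).
lab = {
    "node-a": ("master", 1),
    "node-b": ("data", 1),
    "node-c": ("replica", 2),
    "node-d": ("data", 2),
    "node-w": ("witness", None),
    "node-rc": ("remote_collector", None),
}
print(check_ca_placement(lab))  # []
```

Adding one more data node to fault domain 1 in this example would make the check report the imbalance, which is exactly the situation the "equal number of data nodes" rule is meant to prevent.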

In my lab, I have the following nodes deployed: two of my data nodes will go into fault domain 1 and the other two into fault domain 2. The witness can be placed anywhere.

| Node | Role | Fault Domain |
|---|---|---|
| vRops-1 | Master | Fault domain 1 |
| vRops-4 | Data Node | Fault domain 1 |
| vRops-6 | Witness | None |
| vRops-7/ftp | Data Node | Fault domain 2 |
| vRops-8 | Data Node | Fault domain 2 |

Once logged in to the admin UI, click the Enable CA button to start the Continuous Availability configuration.

By default, the master node is placed in fault domain 1, and we have the option to spread our data nodes in pairs. I've placed two of my data nodes in London vCenter 1 and the other two in London vCenter 2, to represent nodes deployed in separate sites. Click OK to proceed.

The witness is the tie-breaker, and my data nodes are placed evenly across my two vCenters. Once this is defined, click OK.

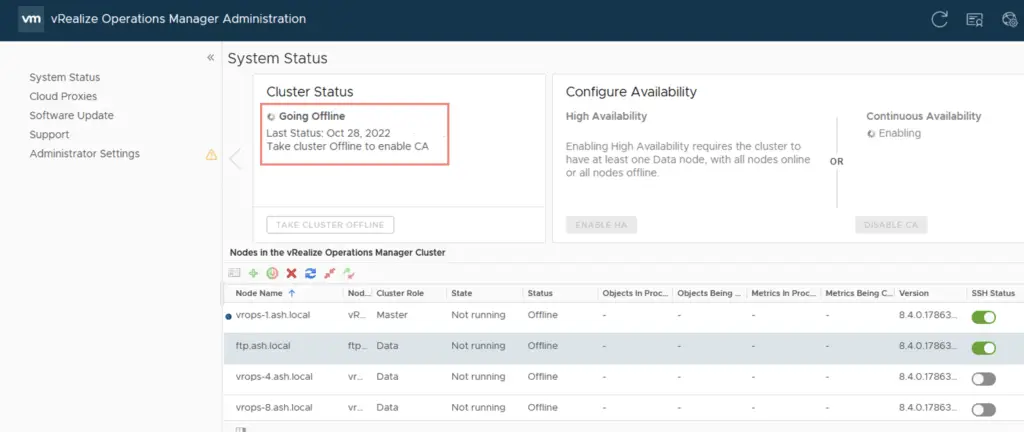

The vROps cluster goes offline during this process.

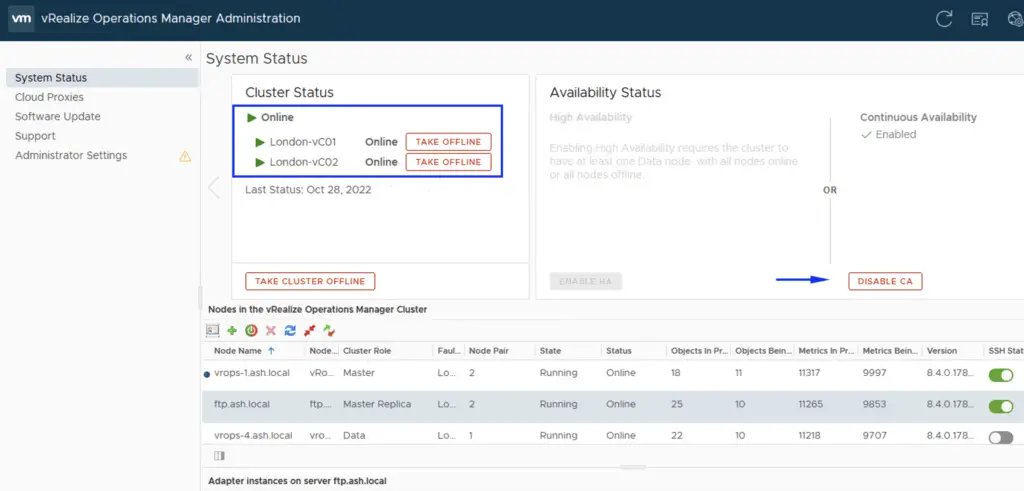

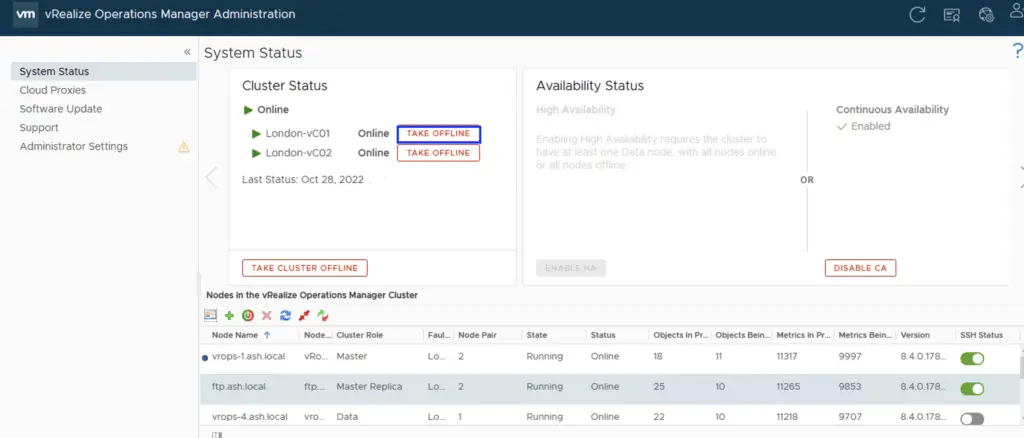

After a few minutes, the Continuous Availability feature is enabled.

Simulating a DR Scenario for London-VC01

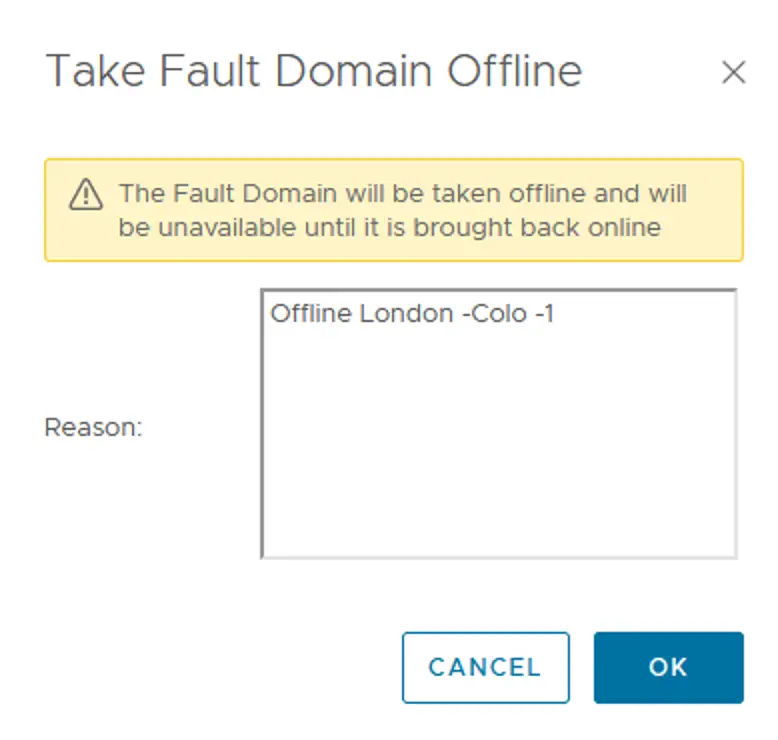

Let's click Take Offline on London VC-1, which represents our London-Colo-1 site.

Click OK to continue.

Our London-VC01 site is now offline. As expected, the cluster is running in degraded mode, but vROps remains available.

As shown above, my master node is now ftp.ash.local, which was promoted from replica, and I can still access the vROps cluster.