The three options for Red Hat-based virtualization solutions are OpenShift (OpenShift Virtualization), Red Hat OpenStack Platform, and converting an existing RHEL 9 system into a virtualization host.

A RHEL 9 system can be converted into a hypervisor host, comparable to VMware ESXi, which allows us to run multiple operating systems on one host machine. In the RHEV platform this role used to be filled by RHEV-H, a bare-metal hypervisor used to host virtual machines. RHEV-M is the management system of that environment: it controls the hypervisors and is used to create, migrate, modify, and control the virtual machines they host.

This blog will focus on converting a Red Hat Enterprise Linux 9.2 system into a hypervisor host.

Limitations of Red Hat Virtualization

A few of the limitations of using RHEV are listed below.

- RHEV does not support creating KVM virtual machines in any type of container. To create VMs in containers, we use OpenShift Virtualization.

- No vCPU hot unplug, memory hot unplug, or QEMU-side I/O throttling, and no storage live migration (the equivalent of VMware Storage vMotion)

- No snapshots of a powered-on VM

- Windows clustering is not supported in RHEL 9

- No incremental live backup

- No support for NVMe devices or InfiniBand devices

- Guest operating system limitations

- Unsupported CPU Architecture

- Virtualization Limits

Supported Guest OS List

The following is the list of guest operating systems that are certified and supported with RHEV

Components of Red Hat Virtualization

QEMU – The emulator, accelerated by the KVM kernel module, simulates a complete virtualized hardware platform that the guest operating system can run in, and manages how resources are allocated on the host and presented to the guest.

Libvirt – The libvirt software suite serves as a management and communication layer. It makes QEMU easier to interact with, enforces security rules, and provides a number of additional tools for configuring and running VMs.

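Prerequisites of Red Hat Virtualization

- Enable hardware virtualization (Intel VT-x or AMD-V) on the physical host. If RHEL 9 itself runs as a VM, as in this lab, the outer hypervisor must expose hardware-assisted virtualization to that VM.

- Install RHEL 9 on the VM with at least 4 vCPUs, 2 GB of RAM, and 6 GB of disk space for the host itself, plus another 2 GB of RAM and 6 GB of disk space for each VM you intend to run on it.

- Install the packages summarized below on the VM.

- Shared storage disks mapped across all RHEV hosts.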

Summary of Installation Packages for RHEV

| Command | Purpose |
|---|---|
| dnf install qemu-kvm libvirt virt-install virt-viewer cockpit cockpit-machines virt-manager libguestfs-tools-c -y | Install hypervisor packages |
| dnf install virtio-win edk2-ovmf swtpm libtpms | Install packages for Windows guest support |
| systemctl start cockpit.socket && systemctl enable cockpit.socket | Start and enable the Cockpit web UI |
| for drv in qemu network nodedev nwfilter secret storage interface; do systemctl start virt${drv}d{,-ro,-admin}.socket; done | Start all modular libvirt daemon sockets |
| dnf info libvirt-daemon-config-network | Show the package that provides the default NAT network |
| systemctl start virtqemud.service && systemctl start virtqemud.socket && systemctl start libvirtd.service && systemctl enable libvirtd.service && systemctl status libvirtd.service | Start, enable, and check the libvirt daemons |
| /var/lib/libvirt/images | Default location for VM disk images and OS installation ISOs |
| virt-install --osinfo list | List all supported guest operating systems |
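The for loop in the table starts the modular libvirt daemon sockets for the current boot only, and it relies on bash brace expansion. The sketch below is one way to also enable them at boot, assuming the same driver list as the table. The function name enable_modular_libvirt and the DRY_RUN variable are hypothetical, added here so the commands can be previewed before anything is changed.

```shell
#!/bin/sh
# Hypothetical helper (not a libvirt tool): enable and start the sockets of
# the RHEL 9 modular libvirt daemons, using the same driver list as the table.
# "systemctl enable --now" enables each unit at boot and starts it right away.
# Set DRY_RUN=1 to print the commands instead of running them.
enable_modular_libvirt() {
    local drv unit
    for drv in qemu network nodedev nwfilter secret storage interface; do
        # Unit names spelled out instead of bash brace expansion, so this
        # also works in a plain POSIX sh.
        for unit in "virt${drv}d.socket" "virt${drv}d-ro.socket" "virt${drv}d-admin.socket"; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "systemctl enable --now $unit"
            else
                systemctl enable --now "$unit" || return 1
            fi
        done
    done
}
```

Run DRY_RUN=1 enable_modular_libvirt to preview the 21 commands, then run enable_modular_libvirt as root to apply them.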

Verify that your system is prepared to be a virtualization host

Check that the host CPU supports hardware virtualization: Intel CPUs report the vmx flag and AMD CPUs report the svm flag. If the command returns output, the CPU is compatible with Red Hat virtualization.

[root@rhev-01 ~]# grep -e 'vmx' /proc/cpuinfo

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc cpuid tsc_known_freq pni pclmulqdq vmx ssse3 cx16 sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes hypervisor lahf_lm pti ssbd ibrs ibpb stibp tpr_shadow vnmi ept vpid tsc_adjust arat flush_l1d arch_capabilities

vmx flags : vnmi invvpid ept_x_only tsc_offset vtpr mtf ept vpid unrestricted_guest ple

(The same flags and vmx flags lines repeat once for each of the host's 4 vCPUs.)

[root@rhev-01 ~]#
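The grep above only looks for the Intel flag. Below is a sketch that checks for either vmx (Intel VT-x) or svm (AMD-V) and counts how many logical CPUs report it. check_hw_virt is a hypothetical helper name, and the optional file argument exists only so the function can also be pointed at a saved copy of /proc/cpuinfo.

```shell
#!/bin/sh
# Hypothetical helper: report which hardware virtualization extension a
# cpuinfo-format file advertises, and on how many logical CPUs.
# Intel VT-x appears as the "vmx" flag, AMD-V as "svm".
check_hw_virt() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    local vmx svm
    # Only look at the per-CPU "flags" lines (this skips the "vmx flags"
    # lines) and match the flag as a whole word.
    vmx=$(grep -E '^flags[[:space:]]*:' "$cpuinfo" | grep -cw 'vmx')
    svm=$(grep -E '^flags[[:space:]]*:' "$cpuinfo" | grep -cw 'svm')
    if [ "$vmx" -gt 0 ]; then
        echo "intel-vt-x cpus=$vmx"
    elif [ "$svm" -gt 0 ]; then
        echo "amd-v cpus=$svm"
    else
        echo "none"
        return 1
    fi
}
```

On the host shown above, check_hw_virt would be expected to report intel-vt-x for all four vCPUs; a non-zero exit status means neither extension is exposed.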

Check that the KVM kernel modules are loaded (on AMD hosts, kvm_amd appears instead of kvm_intel)

[root@rhev-01 ~]# lsmod | grep kvm

kvm_intel 409600 0

kvm 1134592 1 kvm_intel

irqbypass 16384 1 kvm

Install KVM Virtualization Packages

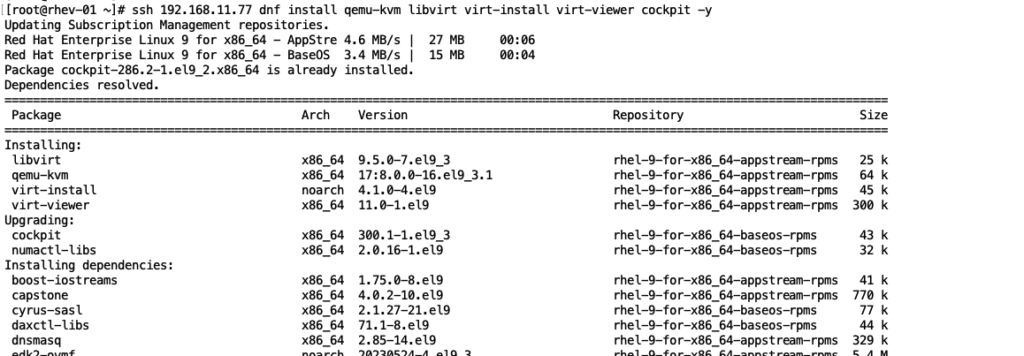

ssh 192.168.11.77 dnf install qemu-kvm libvirt virt-install virt-viewer virt-manager cockpit cockpit-machines libguestfs-tools-c -y
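After the install finishes, one quick way to confirm that nothing was skipped is to query each package with rpm -q, which exits non-zero for a package that is not installed. missing_packages is a hypothetical helper: it prints every package name that is not installed and prints nothing when all of them are present.

```shell
#!/bin/sh
# Hypothetical helper: print each package from the argument list that rpm
# reports as not installed ("rpm -q" exits non-zero for missing packages).
missing_packages() {
    local pkg
    for pkg in "$@"; do
        rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}
```

For example: missing_packages qemu-kvm libvirt virt-install virt-viewer virt-manager cockpit cockpit-machines libguestfs-tools-c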

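Start the libvirtd daemon and enable it to start at system boot. (RHEL 9 defaults to the modular libvirt daemons such as virtqemud and marks the monolithic libvirtd as deprecated; this walkthrough uses libvirtd.)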

[root@rhev-02 ~]# systemctl enable libvirtd && systemctl start libvirtd

Created symlink /etc/systemd/system/multi-user.target.wants/libvirtd.service → /usr/lib/systemd/system/libvirtd.service.

Created symlink /etc/systemd/system/sockets.target.wants/libvirtd.socket → /usr/lib/systemd/system/libvirtd.socket.

Created symlink /etc/systemd/system/sockets.target.wants/libvirtd-ro.socket → /usr/lib/systemd/system/libvirtd-ro.socket.

[root@rhev-02 ~]#

Enable IP forwarding

Next, we need to enable IP forwarding so that NAT networking works for the VMs.
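To see what the kernel is currently using, before or after making the change, you can read the live value under /proc/sys (sysctl -n net.ipv4.ip_forward prints the same number). ip_forward_status is a hypothetical helper name; its optional file argument is only there so it can be tried against a sample file.

```shell
#!/bin/sh
# Hypothetical helper: report whether IPv4 forwarding is on.
# Reads /proc/sys/net/ipv4/ip_forward by default (1 = on, 0 = off).
ip_forward_status() {
    local file="${1:-/proc/sys/net/ipv4/ip_forward}"
    local value
    # Strip the trailing newline; fail with status 2 if the file is unreadable.
    value=$(tr -d '[:space:]' < "$file") || return 2
    if [ "$value" = "1" ]; then
        echo "enabled"
    else
        echo "disabled"
        return 1
    fi
}
```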

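Append the setting to /etc/sysctl.conf (tee -a keeps the file's existing contents instead of overwriting them) and reload it:

echo "net.ipv4.ip_forward = 1" | tee -a /etc/sysctl.conf

sysctl -p

Enable IOMMU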

Verify that your system is prepared to be a Red Hat virtualization host by running virt-host-validate:

[root@rhev-01 ~]# virt-host-validate

QEMU: Checking for hardware virtualization : PASS

QEMU: Checking if device /dev/kvm exists : PASS

QEMU: Checking if device /dev/kvm is accessible : PASS

QEMU: Checking if device /dev/vhost-net exists : PASS

QEMU: Checking if device /dev/net/tun exists : PASS

QEMU: Checking for cgroup 'cpu' controller support : PASS

QEMU: Checking for cgroup 'cpuacct' controller support : PASS

QEMU: Checking for cgroup 'cpuset' controller support : PASS

QEMU: Checking for cgroup 'memory' controller support : PASS

QEMU: Checking for cgroup 'devices' controller support : PASS

QEMU: Checking for cgroup 'blkio' controller support : PASS

QEMU: Checking for device assignment IOMMU support : PASS

QEMU: Checking if IOMMU is enabled by kernel : WARN (IOMMU appears to be disabled in kernel. Add intel_iommu=on to kernel cmdline arguments)

QEMU: Checking for secure guest support : WARN (Unknown if this platform has Secure Guest support)

We have a warning above that shows IOMMU is not enabled.

What is IOMMU in Linux?

IOMMU (Input/Output Memory Management Unit) is a hardware feature (Intel VT-d, AMD-Vi) that translates the addresses devices use for DMA into physical memory addresses, much as the CPU's MMU translates virtual addresses for processes. On a host running multiple virtual machines on the same hardware, each VM has its own address space, and the IOMMU is what allows a physical device (for example, a PCI network card or GPU) to be assigned directly to one VM while confining that device's memory accesses to that VM, so it cannot read or overwrite memory belonging to the host or to other VMs.

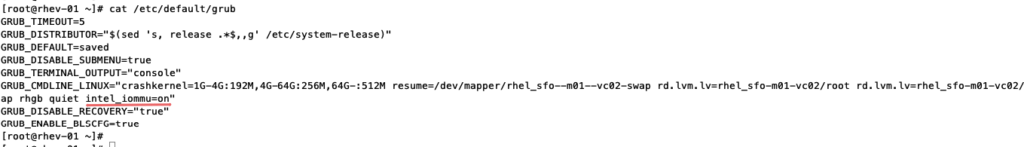
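Once IOMMU is active, the kernel places devices into IOMMU groups under /sys/kernel/iommu_groups. Devices in the same group cannot be isolated from one another, so device assignment to a VM works at the granularity of a whole group. The sketch below lists each group and its PCI devices; list_iommu_groups is a hypothetical helper, and the directory argument exists only so it can be run against a test tree. On a host without IOMMU enabled, the directory is typically missing or empty.

```shell
#!/bin/sh
# Hypothetical helper: list every IOMMU group and the PCI devices in it.
# Defaults to the kernel's sysfs tree; groups appear in lexical order.
list_iommu_groups() {
    local base="${1:-/sys/kernel/iommu_groups}"
    local group dev
    if [ ! -d "$base" ]; then
        echo "no IOMMU groups found (is IOMMU enabled?)" >&2
        return 1
    fi
    for group in "$base"/*; do
        # An empty directory leaves the glob unexpanded; skip it.
        [ -d "$group/devices" ] || continue
        for dev in "$group"/devices/*; do
            [ -e "$dev" ] || continue
            echo "group $(basename "$group"): $(basename "$dev")"
        done
    done
}
```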

Updating the GRUB boot loader on RHEL to enable IOMMU

Edit /etc/default/grub and add intel_iommu=on to the end of the GRUB_CMDLINE_LINUX line, inside the closing quote.

As the root user, run the following command to regenerate the GRUB configuration:

grub2-mkconfig -o /boot/grub2/grub.cfg
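RHEL 9 also ships grubby, which adds a kernel argument to every installed kernel's boot entry without hand-editing /etc/default/grub: grubby --update-kernel=ALL --args="intel_iommu=on". Either way, the argument only takes effect after a reboot. Once the machine is back up, the sketch below checks that the running kernel actually received it. kernel_arg_present is a hypothetical helper, and its optional second argument lets it read a file other than /proc/cmdline.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the exact kernel argument $1 appears in the
# kernel command line ($2, default /proc/cmdline), fail otherwise.
kernel_arg_present() {
    local arg="$1"
    local cmdline="${2:-/proc/cmdline}"
    # Put each whitespace-separated argument on its own line, then require a
    # whole-line, fixed-string match, so "iommu=on" does not match
    # "intel_iommu=on".
    tr -s '[:space:]' '\n' < "$cmdline" | grep -qxF -- "$arg"
}
```

For example: kernel_arg_present intel_iommu=on && echo "IOMMU argument is active"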

Reboot the VM

reboot

After the reboot, verify again that your system is prepared to be a Red Hat virtualization host by running virt-host-validate:

[root@rhev-01 ~]# virt-host-validate

QEMU: Checking for hardware virtualization : PASS

QEMU: Checking if device /dev/kvm exists : PASS

QEMU: Checking if device /dev/kvm is accessible : PASS

QEMU: Checking if device /dev/vhost-net exists : PASS

QEMU: Checking if device /dev/net/tun exists : PASS

QEMU: Checking for cgroup 'cpu' controller support : PASS

QEMU: Checking for cgroup 'cpuacct' controller support : PASS

QEMU: Checking for cgroup 'cpuset' controller support : PASS

QEMU: Checking for cgroup 'memory' controller support : PASS

QEMU: Checking for cgroup 'devices' controller support : PASS

QEMU: Checking for cgroup 'blkio' controller support : PASS

QEMU: Checking for device assignment IOMMU support : PASS

QEMU: Checking if IOMMU is enabled by kernel : PASS

QEMU: Checking for secure guest support : WARN (Unknown if this platform has Secure Guest support)

[root@rhev-01 ~]#

One-Liners and Cheat Sheet

# virt-host-validate

If the checks return PASS, your system is prepared for creating VMs.
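When running this check from a script, it can help to reduce the output to counts and a pass/fail exit status. summarize_validate is a hypothetical helper; it assumes each line ends in ": PASS", ": WARN (...)" or ": FAIL (...)" as in the output shown earlier, and it returns non-zero only when at least one check failed, so warnings such as the secure guest one do not stop the script.

```shell
#!/bin/sh
# Hypothetical helper: read virt-host-validate output on stdin, print how
# many checks passed, warned, and failed, and exit non-zero only if at least
# one check failed (warnings are not treated as failures).
summarize_validate() {
    awk -F': ' '
        NF > 1 {
            # The result is the first word after the last ": " on the line.
            split($NF, words, " ")
            count[words[1]]++
        }
        END {
            printf "PASS=%d WARN=%d FAIL=%d\n", count["PASS"], count["WARN"], count["FAIL"]
            exit (count["FAIL"] > 0)
        }'
}
```

For example: virt-host-validate | summarize_validate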

Verify that the libvirt default network is active and configured to start automatically:

virsh net-list --all

If it is not, mark it to start automatically and start it:

virsh net-autostart default

Network default marked as autostarted

# virsh net-start default

Network default started
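To check the result from a script rather than by eye, the virsh net-list --all output can be parsed. net_status is a hypothetical helper; it assumes the layout shown in this post (a header line, a dashed separator, then one network per line with Name, State, Autostart, and Persistent columns) and prints the State and Autostart values for one network.

```shell
#!/bin/sh
# Hypothetical helper: read "virsh net-list --all" output on stdin and print
# the State and Autostart columns for the network named in $1.
# Exits non-zero if the network is not listed.
net_status() {
    awk -v name="$1" '
        NR > 2 && $1 == name { print $2, $3; found = 1 }
        END { exit !found }'
}
```

For example: virsh net-list --all | net_status default should print "active yes" once the steps above have been applied.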

To start a local VM, use virsh start:

virsh start demo-guest1

To start a VM on a remote host, pass a remote connection URI to virsh with -c:

# virsh -c qemu+ssh://root@192.168.123.123/system start demo-guest1

To view basic information about a VM (virsh dumpxml prints its full XML configuration):

virsh dominfo demo-guest1

For information about a VM’s disks and other block devices:

# virsh domblklist testguest3

To obtain more details about the vCPUs of a specific VM:

# virsh vcpuinfo testguest4

To obtain information about a VM's file systems and their mount points (this requires the QEMU guest agent to be running in the guest):

# virsh domfsinfo testguest3
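For a one-line overview per VM, the virsh dominfo output can be condensed. dominfo_summary is a hypothetical helper that reads dominfo output on standard input; it assumes the "Field:   value" layout virsh prints and picks out the name, state, vCPU count, and maximum memory.

```shell
#!/bin/sh
# Hypothetical helper: condense "virsh dominfo" output (stdin) into one line.
# Fields are split on a colon followed by any number of spaces.
dominfo_summary() {
    awk -F': *' '
        $1 == "Name"       { name = $2 }
        $1 == "State"      { state = $2 }
        $1 == "CPU(s)"     { cpus = $2 }
        $1 == "Max memory" { mem = $2 }
        END { printf "%s state=%s vcpus=%s maxmem=%s\n", name, state, cpus, mem }'
}
```

For example, to summarize every VM on the host (assuming VM names without spaces): for vm in $(virsh list --all --name); do virsh dominfo "$vm" | dominfo_summary; done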

To list all virtual networks on your host:

# virsh net-list --all

Name State Autostart Persistent

---------------------------------------------

default active yes yes

labnet active yes yes

To show all VMs on a host, including those that are shut off:

virsh list --all

To shut down a local VM, use virsh shutdown:

# virsh shutdown demo-guest1

To shut down a VM on a remote host, pass a remote connection URI with -c:

# virsh -c qemu+ssh://root@10.0.0.1/system shutdown demo-guest1
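virsh shutdown only asks the guest to power off, so a script that needs every VM down (for example before host maintenance) usually has to wait and check. The sketch below does that for all running VMs on a local or remote host. shutdown_all_vms and its timeout parameter are hypothetical, and it assumes VM names contain no whitespace.

```shell
#!/bin/sh
# Hypothetical helper (not part of libvirt): ask every running VM on a host
# to shut down gracefully, then wait up to a timeout for each to power off.
#   $1  libvirt connection URI (default: qemu:///system, the local host)
#   $2  total seconds to wait across all VMs (default: 120)
# Assumes VM names contain no whitespace.
shutdown_all_vms() {
    local uri="${1:-qemu:///system}"
    local timeout="${2:-120}"
    local waited=0
    local vms vm
    # --name prints one domain name per line; --state-running filters.
    vms=$(virsh -c "$uri" list --name --state-running)
    for vm in $vms; do
        virsh -c "$uri" shutdown "$vm" || return 1
    done
    for vm in $vms; do
        # virsh domstate prints the state, e.g. "running" or "shut off".
        while [ "$(virsh -c "$uri" domstate "$vm")" != "shut off" ]; do
            if [ "$waited" -ge "$timeout" ]; then
                echo "timed out waiting for $vm" >&2
                return 1
            fi
            sleep 1
            waited=$((waited + 1))
        done
    done
}
```

For example: shutdown_all_vms qemu+ssh://root@10.0.0.1/system 300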

That's it for Red Hat virtualization on RHEL 9. You should now understand how to turn a RHEL 9 system into a hypervisor host, which will hopefully help you with the upcoming blog on OpenShift.