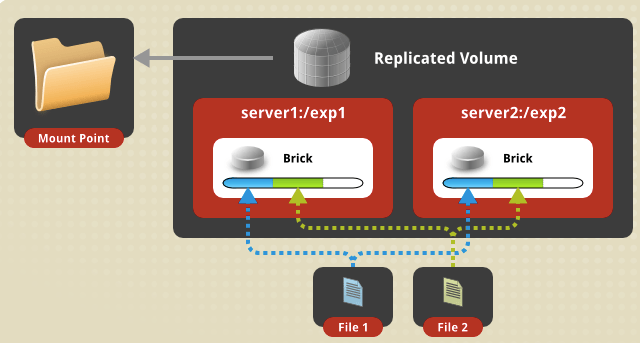

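In a distributed volume, files are spread across the bricks (disks) in the volume. Distribution by itself keeps only one copy of each file, so the redundancy needed should a server crash comes from replication: a replica 3 volume, like the one created below, stores a copy of every file on each of the three servers.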

Create a new directory for GlusterFS on all our hosts with the command:

root@gluster02:~# sudo mkdir -p /glusterfs/volume01

root@gluster02:~# ls -ld /glusterfs/

drwxr-xr-x 3 root root 22 Nov 17 18:49 /glusterfs/

root@gluster02:~# ls -ld /glusterfs/volume01/

drwxr-xr-x 2 root root 6 Nov 17 18:49 /glusterfs/volume01/

root@gluster02:~#
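Since the directory has to exist on every host, the step above can be scripted from one node. The following is a minimal sketch, assuming passwordless SSH between the nodes and the three hostnames used in this post; the function name `brick_dir_cmds` is made up for illustration. It only prints the commands so they can be reviewed first.

```shell
# Dry run: print one mkdir command per host instead of executing it.
# Arguments: brick directory, then the list of hosts.
# Drop the leading `echo` inside the loop to run the commands for real.
brick_dir_cmds() {
  dir=$1
  shift
  for host in "$@"; do
    echo ssh "$host" "sudo mkdir -p $dir"
  done
}

# Example call:
#   brick_dir_cmds /glusterfs/volume01 gluster01 gluster02 gluster03
```

Printing first is a deliberate choice here: a typo in a hostname or path is easier to spot in the dry-run output than after the directories have been created.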

Create a GlusterFS Volume

gluster volume create dockervol replica 3 transport tcp gluster01:/glusterfs/volume01 gluster02:/glusterfs/volume01 gluster03:/glusterfs/volume01

Start the volume

sudo gluster volume start dockervol

Verify the Gluster volume info

root@gluster01:/glusterfs# gluster volume info

Volume Name: dockervol

Type: Distribute

Volume ID: e6eec327-8902-4d81-a9e0-5594678722af

Status: Started

Snapshot Count: 0

Number of Bricks: 1

Transport-type: tcp

Bricks:

Brick1: gluster03:/glusterfs/volume01

Options Reconfigured:

transport.address-family: inet

storage.fips-mode-rchecksum: on

nfs.disable: on

root@gluster01:/glusterfs#
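The create and verify steps above can be sketched as two small helpers. This is an illustration only: `build_create_cmd` and `volume_field` are hypothetical names, the first merely prints a `gluster volume create` command with one brick per host, and the second pulls a single field out of `gluster volume info` output so a script can confirm that `Type` and `Status` are what you expect.

```shell
# Print a `gluster volume create` command for a replica volume with one
# brick per host. Arguments: volume name, brick directory, then the hosts.
build_create_cmd() {
  vol=$1
  dir=$2
  shift 2
  bricks=""
  for host in "$@"; do
    bricks="$bricks $host:$dir"
  done
  # After the shift, $# is the number of hosts, used as the replica count.
  printf '%s\n' "gluster volume create $vol replica $# transport tcp$bricks"
}

# Extract one field (for example "Type" or "Status") from
# `gluster volume info` output supplied on stdin.
volume_field() {
  awk -F': ' -v key="$1" '$1 == key { print $2; exit }'
}

# Example calls:
#   build_create_cmd dockervol /glusterfs/volume01 gluster01 gluster02 gluster03
#   gluster volume info dockervol | volume_field Status
```

A check on the `Type` field is worth doing after creation, because a replicated volume should report a replicated type with one brick per server rather than a single-brick distributed volume.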


Mount the GlusterFS

With all this in place, we can test the GlusterFS distributed file system. On gluster01, gluster02, and gluster03 issue the command to mount the GlusterFS volume to a mount point such as /mnt.

root@gluster01:/# mount -t glusterfs gluster01:/dockervol /mnt

root@gluster01:/# ssh gluster02 mount -t glusterfs gluster02:/dockervol /mnt

root@gluster01:/# ssh gluster03 mount -t glusterfs gluster03:/dockervol /mnt

Managing Trusted Storage Pools

To list all nodes in the TSP:

root@gluster01:~# gluster pool list

UUID Hostname State

7f356799-1049-4c0b-a629-f4eafe610493 gluster02 Connected

4eb96e86-a0ab-4f2b-997e-34d18ae96c2c gluster03 Connected

2e6f3f80-ba95-4613-9144-c15f215f4550 localhost Connected

root@gluster01:~#

Viewing Peer Status

root@gluster01:~# gluster peer status

Number of Peers: 2

Hostname: gluster02

Uuid: 7f356799-1049-4c0b-a629-f4eafe610493

State: Peer in Cluster (Connected)

Hostname: gluster03

Uuid: 4eb96e86-a0ab-4f2b-997e-34d18ae96c2c

State: Peer in Cluster (Connected)

root@gluster01:~#
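The peer status output above can also be checked from a script instead of by eye. The sketch below assumes the output format shown in this post; `all_peers_connected` is a hypothetical helper name. It reads `gluster peer status` output on stdin and succeeds only when every reported peer is connected.

```shell
# Succeeds (exit status 0) only if the number of peers in the
# "Peer in Cluster (Connected)" state equals the "Number of Peers" count.
all_peers_connected() {
  awk '
    /^Number of Peers:/ { expected = $4 }
    /^State: Peer in Cluster \(Connected\)/ { connected++ }
    END { exit !(expected > 0 && connected == expected) }
  '
}

# Example call:
#   gluster peer status | all_peers_connected && echo "all peers connected"
```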

Accessing Data – Setting Up GlusterFS Client

There are several ways to access a GlusterFS volume: NFS, CIFS, and the native GlusterFS client are all supported. In this demo, we will stick with the default glusterfs client.

root@gluster04:~# sudo apt install glusterfs-client -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

attr glusterfs-common ibverbs-providers libgfapi0 libgfchangelog0 libgfrpc0 libgfxdr0 libglusterfs0 libibverbs1 libnl-route-3-200 librdmacm1 libtirpc-common libtirpc3

python3-prettytable

Mount the distributed file system with the command:

root@gluster04:~# mount -t glusterfs gluster01:/dockervol /mnt/glusterfs/

Automatically Mounting Volumes

You can configure your system to automatically mount the Gluster volume each time your system starts.
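One way to add the entry without duplicating it on repeated runs is sketched below. `add_fstab_entry` is a hypothetical helper, not part of GlusterFS; it appends a line to the fstab file only if that exact line is not already there. It defaults to /etc/fstab, which requires root, and accepts another path so it can be tried on a copy first.

```shell
# Append an fstab entry only if that exact line is not already present.
# Arguments: entry line, fstab path (optional, defaults to /etc/fstab).
add_fstab_entry() {
  entry=$1
  fstab=${2:-/etc/fstab}
  # -x matches whole lines, -F treats the entry as a fixed string.
  grep -qxF -- "$entry" "$fstab" || printf '%s\n' "$entry" >> "$fstab"
}

# Example call (as root):
#   add_fstab_entry 'gluster01:/dockervol /mnt/glusterfs glusterfs defaults,_netdev 0 0'
```

The `_netdev` option in the entry tells the system that the mount depends on the network, so it is not attempted before networking is up.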

root@gluster04:~# tail -2 /etc/fstab

gluster01:/dockervol /mnt/glusterfs glusterfs defaults,_netdev 0 0

Testing the Filesystem

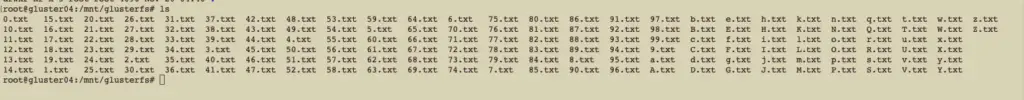

With all this in place, I will use a small for loop to create several files from my client:

for f in {a..z} {A..Z} {0..99}

do

echo hello > "$f.txt"

done
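The loop above should create 152 files: 26 lowercase names, 26 uppercase names, and 100 numbered names. A quick way to confirm the count is sketched below; `count_txt_files` is a hypothetical helper, and the example call assumes the loop was run inside the client mount point /mnt/glusterfs in an otherwise empty directory.

```shell
# Count regular *.txt files directly inside the given directory.
count_txt_files() {
  find "$1" -maxdepth 1 -type f -name '*.txt' | wc -l | tr -d ' '
}

# Example call:
#   count_txt_files /mnt/glusterfs
```

Running the same count against the brick directory on each server is a simple way to see how the files were placed: with a replicated volume every brick should hold the full set, while a purely distributed volume splits the files among its bricks.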

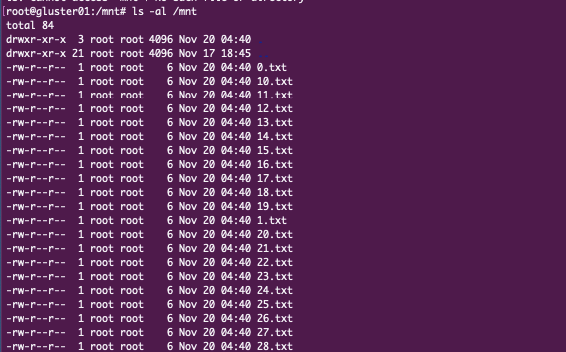

Verification from Gluster level

I can see all my files on all my cluster nodes.

The GlusterFS distributed file system is now up and running.